Thorium

Thorium is a highly scalable, distributed malware analysis and data generation framework. Thorium is designed to make cyber incident response, triage, and file analysis easier through the safe ingestion and storage of data, automation of analyses and easy access to stored analyses and metadata. Because of the sensitivity and potential maliciousness of data handled within Thorium, uploaded files are placed into an encrypted/neutered format called CaRT. After initial file upload, all analysis is conducted in sandboxed environments where protective measures and sanitization steps can be easily applied.

Getting Started

This guide will cover how to start using Thorium to analyze data at scale.

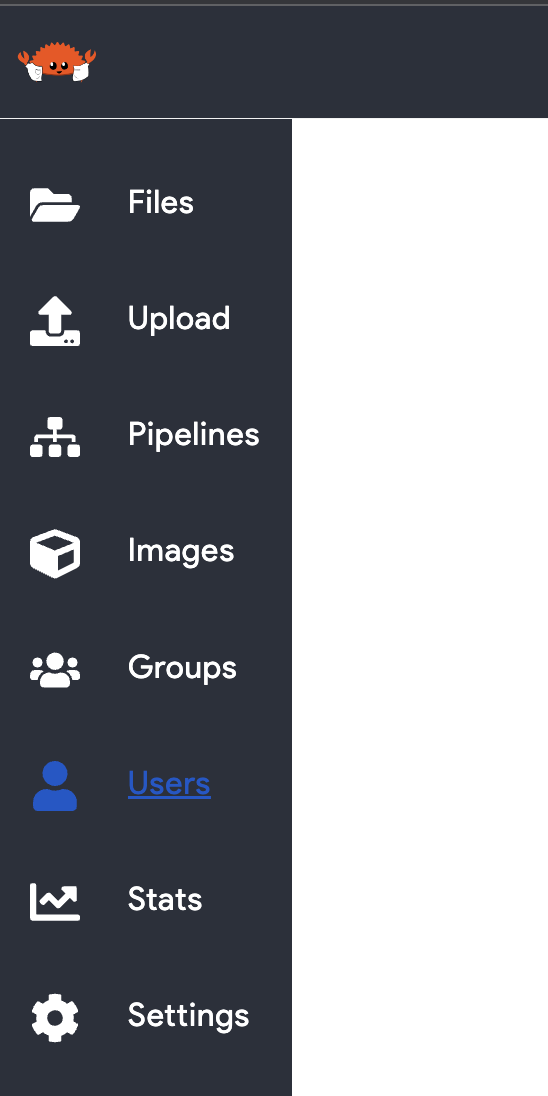

Most tasks in Thorium can be accomplished in both the Web UI and our command line tool called Thorctl (pronounced Thor-cuddle). The interface you choose to use will depend on the number of samples or repositories you are working with and whether a browser client is accessible within your own analysis environment. If you need to analyze hundreds or thousands of samples, you can utilize Thorctl to upload the data, run analysis jobs, and download then results of those jobs. Thorctl is also useful when working on a “headless” analysis environment where only a command line interface is at your disposal. Alternatively, the Web UI provides an easy way to upload, analyze, and view a smaller number of samples and repos for those with access to a browser client. The Web UI also provides the ability create groups and modify group membership.

If you have already registered for a Thorium account you can skip to the Login section of this chapter.

Registering For A New Thorium Account

Before you can login to Thorium you need to register for an account. This can be done through the Web UI by clicking the register button on the login page. Then enter your username and password. If your Thorium instance is using LDAP then use your LDAP password, otherwise you may create your new password here.

Logging Into Thorium

Most tasks in Thorium require you to be authenticated. Both the Web UI and Thorctl will also require occasional reauthentication as your token or cookie expires. The following videos demonstrate how to use our two client interfaces to login to your Thorium account.

Web UI

You will automatically be sent to the login page when you initially navigate to Thorium using your browser, or when your token expires while browsing Thorium resources. To login via the Web UI, just enter your username and password as shown in the video below and then click login. Once you (re)login, you will automatically be redirected to your home page for a new login, or back to your previous page in the case of an expired cookie.

Thorctl

To login with Thorctl you will first need to download the executable. To download Thorctl, follow one of the guides below based on your operating system type.

Download

Linux/Mac

Run this command on the Linux system that you want Thorctl to be installed on.

Windows

Download Thorctl from this Windows Thorctl link.

Login

After you have downloaded Thorctl you can authenticate to Thorium by running:

Enter your username and password when prompted and you should get a success message as shown below:

Roles, Permissions, and Ownership

Thorium uses groups to grant access to resources and role-based permissions to limit the ability of individuals to conduct certain operations on those resources.

Resource Ownership and Access

All resources within Thorium including files and analysis pipelines are owned by the person that created them,

but uploaded to a group. Only group members and those with the Admin system role can access a group’s resources or

even know that a particular resource like a file has been uploaded. This explicit groups-based access model helps to

prevent information leakage and supports multitenancy of the Thorium system. Different groups can use the same Thorium

instance without risking sensitive data being leaked across groups. In order for a user to have access to resources,

such as files, the user can be added to that resource’s group or the resource can be reuploaded to one of the user’s

existing groups.

Roles

Roles within Thorium are scoped at two different levels: System and Group. The capabilities granted by Group roles

apply to resources within a specific group while System roles apply globally.

System Roles

System roles primarily exist to limit individuals from conducting certain sensitive actions at a global level. Since

anyone with a Thorium account can create their own groups, there is no practical way to limit certain actions using

only Group roles.

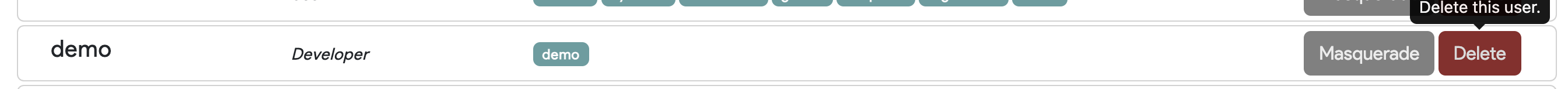

A Thorium account will only have one System role at a time: User, Developer, or Admin. When you first register

for an account, you are granted the User system role by default. This will allow you to conduct analysis within

Thorium, but does not allow you to create new analysis pipelines or give you any privileged access to data outside of

your groups. If your interactions with Thorium require you to add or modify existing pipelines or tools (called

images), you will need a Thorium Admin to give you the Developer role. The Developer role is considered a

privileged role because it effectively allows an account holder to execute arbitrary binaries/commands within

Thorium’s sandboxed analysis environments.

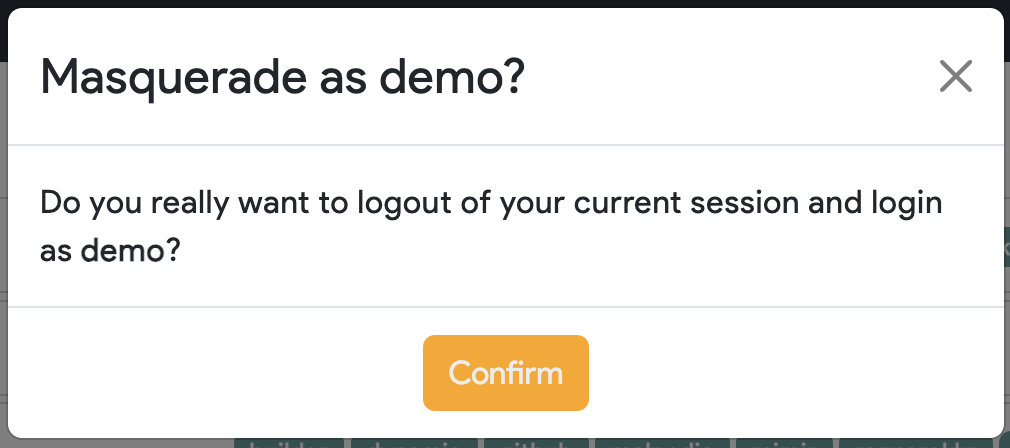

The Thorium Admin role grants access to view and modify all resources within Thorium, irrespective of the resource’s

group. In contrast, a User or Developer must still have the correct group membership and group role if they plan on

using the resources of that group. Each Thorium deployment should have at least one person with the Admin system role.

Admins help to curate the data hosted in Thorium and provide continuity when group members leave the hosting

organization.

The following table summarizes the abilities granted by Thorium’s three System level roles and any limitations that

apply to those granted abilities:

| System Role | Abilities | Limited By |

|---|---|---|

| User | Can create groups and run existing pipelines, but cannot create or modify pipelines or images. | Must have sufficient group role and group membership |

| Analyst | Can create groups, and can add, modify, and run analysis pipelines and images. Has global view into all data in Thorium. | None |

| Developer | Can create groups, and can add, modify, and run analysis pipelines and images. | Must have sufficient group role and group membership |

| Admin | Can access, view, and modify all resources, change group membership and update System and Group roles. | None |

You can view your System role on the profile page, as shown below.

Group Roles

Group roles control your ability to conduct certain operations on the group’s resources. Group resources can include

images, pipelines, repos, files, tags, comments, and analysis tool results.

There are four group roles: Owner, Manager, User, and Monitor. Anyone with a Thorium account can create their

own groups. When you create a new group, you are automatically added as an Owner of the group. When you are added to

an existing group your role within the group will be assigned. Group roles and their associated capabilities are

defined in the following table.

| Ability | Owners | Managers | Users | Monitors |

|---|---|---|---|---|

| View Resources | yes | yes | yes | yes |

| Run Pipelines | yes | yes | yes | no |

| Upload/Create Resources1 | yes | yes | yes | no |

| Modify Resources1 | all | all | self created only | no |

| Delete Resources | all | all | self created only | no |

| Group Membership | add/remove any member | add/remove non-owner members | read only | read only |

| Delete Group | yes | no | no | no |

-

For pipelines and images this ability also requires a

DeveloperorAdminSystemlevel role. Without the correctSystemrole, you will not be able to modify or create pipelines or images even if you have the correct group role (Owner,Manager, orUser). However, you will still be able to run existing pipelines that otherDevelopershave added so long as you are not aMonitor. ↩ ↩2

Creating/Editing Groups

All resources within Thorium including files, analysis pipelines, and tools are owned by a user and uploaded to a group. Access to modify group resources is granted by your role within the group. If you want to learn more about Thorium’s roles and permissions system, you can read this page. The ability to manage group membership and create new groups is only available in Thorium’s Web UI.

WebUI

To create a new group follow the steps in the following video:

You may have noticed that you can add users to different group roles. As we described in the previous chapter, group roles are how you define the abilities a group member has within the group. Roles and their abilities are defined in the table below. Group resources can include images, pipelines, repos, files, tags, comments, and tool results.

| Ability | Owners | Managers | Users | Monitors |

|---|---|---|---|---|

| View Resources | yes | yes | yes | yes |

| Run Pipelines | yes | yes | yes | no |

| Upload/Create Resources | yes | yes | yes | no |

| Modify/Delete Resources | all | all | self owned only | none |

| Group Membership | add/remove any member | add/remove non-owner members | no | no |

| Delete Group | yes | no | no | no |

A Thorium user can be added to a group either as a direct user or as part of a metagroup. This functionality allows you to use an external group membership system (ie LDAP/IDM) to grant access to Thorium resources.

| type | description |

|---|---|

| direct user | A single user in Thorium |

| metagroups | A group of users that is defined in LDAP |

By default, metagroup info is updated every 10 minutes or when a Thorium group is updated. This means that when a user is added or removed from a metagroup it may take up to 10 minutes for that change to be visible in Thorium via the Web UI.

Thorctl

Thorctl is a command line tool aimed at enabling large scale operations within Thorium. Thorctl provides a variety of features including:

- uploading files

- uploading Git repositories

- ingesting Git repositories by URL

- downloading files/repos

- starting reactions/jobs

- starting Git repo builds

- downloading results

- listing files

An example of some of these can be found in the Users section of these docs.

To install Thorctl, follow the instructions for your specific operating system in the sections below.

Linux/Mac

On a Linux or Mac machine, open a terminal window and run the following command:

Insecure Download

- Although not recommended, you can bypass certificate validation and download Thorctl insecurely

by adding the

-k(insecure) flag tocurland--insecureat the very end of the command (see the command below for reference). The former tellscurlto download the script itself insecurely while the latter will informs the script to use insecure communication when downloading Thorctl.

Windows

Download Thorctl from the following link: Windows Thorctl

Login Via Thorctl

After you have downloaded Thorctl, you can authenticate by running:

Configure Thorctl

Logging into Thorium using thorctl login will generate a Thorctl config file containing the

user’s authentication key and the API to authenticate to. By default, the config is stored

in <USER-HOME-DIR>/.thorium/config.yml, but you can manually specify a path like so:

thorctl --config <PATH-TO-CONFIG-FILE> ...

The config file can also contain various other optional Thorctl settings. To easily modify the config

file, use thorctl config. For example, you can disable the automatic check for Thorctl updates

by running:

thorctl config --skip-updates=true

You can specify a config file to modify using the --config flag as described above:

thorctl --config <PATH-TO-CONFIG-FILE> config --skip-updates=true

Thorctl Help

Thorctl will print help info if you pass in either the -h or --help flags.

$ thorctl -h

The command line args passed to Thorctl

Usage: thorctl [OPTIONS] <COMMAND>

Commands:

clusters Manage Thorium clusters

login Login to a Thorium cluster

files Perform file related tasks

reactions Perform reactions related tasks

results Perform results related tasks

repos Perform repositories related tasks

help Print this message or the help of the given subcommand(s)

Options:

--admin <ADMIN> The path to load the core Thorium config file from for admin actions [default: ~/.thorium/thorium.yml]

--config <CONFIG> path to authentication key files for regular actions [default: ~/.thorium/config.yml]

-k, --keys <KEYS> The path to the single user auth keys to use in place of the Thorctl config

-w, --workers <WORKERS> The number of parallel async actions to process at once [default: 10]

-h, --help Print help

-V, --version Print version

Each subcommand of Thorctl (eg files) has its own help menu to inform users on the available options for that

subcommand.

$ thorctl files upload --help

Upload some files and/or directories to Thorium

Usage: thorctl files upload [OPTIONS] --file-groups <GROUPS> [TARGETS]...

Arguments:

[TARGETS]... The files and or folders to upload

Options:

-g, --groups <GROUPS> The groups to upload these files to

-p, --pipelines <PIPELINES> The pipelines to spawn for all files that are uploaded

-t, --tags <TAGS> The tags to add to any files uploaded where key/value is separated by a deliminator

--deliminator <DELIMINATOR> The deliminator character to use when splitting tags into key/values [default: =]

-f, --filter <FILTER> Any regular expressions to use to determine which files to upload

-s, --skip <SKIP> Any regular expressions to use to determine which files to skip

--folder-tags <FOLDER_TAGS> The tags keys to use for each folder name starting at the root of the specified targets

-h, --help Print help

-V, --version Print version

Users

The Thorium user role is the default role for any newly created Thorium account. Thorium users can range from incident responders to malware reverse engineers or vulnerability researchers. With this role you can:

- upload files and Git repositories

- add and remove metadata tags on uploaded files and repositories

- run a pipeline on a file or repository (called a reaction)

- view reaction status and logs

- view tool results

- comment on files and upload comment attachments

- create new groups

Permissions that users do not have:

- create or modify tools (images) or pipelines

If you need to create or modify analysis pipelines, you may need to ask the admins of your Thorium instance to add you as a Developer.

Uploading Files

Now that you have access to Thorium, you may want to upload some files and run analysis tools on them. You can do that in either the Web UI or through Thorctl. When uploading a small number of files, the Web UI is usually preferable, while Thorctl is helpful in uploading many files or when a browser is not accessible.

When uploading files there are several options you may set that are described below. Groups is the only required

field. If you are not yet a member of any groups then follow the steps in the

Adding/Editing Groups section and come back afterward.

| Field | Description | Format/Accepted Values | Required |

|---|---|---|---|

| Groups | Limits who can see this file | One or more group names | yes |

| Description | A short text explanation of the sample and/or its source | Any valid UTF-8 formatted text | no |

| Tags | Key/value pairs to help locate and categorize files | Any key/value pair; both key and value are required | no |

| Origins | Specifies where a file came from | Downloaded, Transformed, Unpacked, Wire, Incident, or Memory Dump | no |

It is recommended that you provide origin information for any file(s) you upload whenever possible. A key feature of Thorium is its ability to store origin information in a structured format and automatically translate that information into metadata tags. Tags allow you to filter the files that you browse through when looking for a file. As a result, if you don’t provide any origin information, it may be difficult to locate your files at a later date.

File Origins

File Origins are the single most important piece of information in describing, locating, and understanding relationships

between files. Described below are all the options for file origins and their respective subfields.

Downloaded

The “Downloaded” Origin specifies that the file was downloaded from a specific URL.

| Subfield | Description | Format/Accepted Values | Required |

|---|---|---|---|

| URL | The URL the file was downloaded from | A valid URL | yes |

| Site Name | The name of the website the file was downloaded from | Any UTF-8 formatted text | no |

Transformed

The “Transformed” Origin specifies that the file is a result of transforming another file, whether by a tool or some other means.

| Subfield | Description | Format/Accepted Values | Required |

|---|---|---|---|

| Parent | The SHA256 of the original file that was transformed to produce this file | A valid SHA256 of an existing file in Thorium1 | yes |

| Tool | The tool that was used to produce this transformed file | Any UTF-8 formatted text | no |

| Flags | The tool command-line flags that were used to transform this sample | One or more hyphenated alphanumeric flags2 | no |

- Your account must have access to the parent file in order to specify it in a file’s origin

- Example:

--flag1, --flag2, --flag3, -f

Unpacked

The “Unpacked” Origin specifies that the file was unpacked from some other file, whether by a tool or some other means.

| Subfield | Description | Format/Accepted Values | Required |

|---|---|---|---|

| Parent | The SHA256 of the original file that this file was unpacked from | A valid SHA256 of an existing file in Thorium1 | yes |

| Tool | The tool that was used to unpack this file | Any UTF-8 formatted text | no |

| Flags | The tool command-line flags that were used to unpack this sample | One or more hyphenated alphanumeric flags2 | no |

- Your account must have access to the parent file in order to specify it in a file’s origin

- Example:

--flag1, --flag2, --flag3, -f

Wire

The “Wire” Origin specifies that a file was captured/sniffed “on the wire” en route to a destination.

| Subfield | Description | Format/Accepted Values | Required |

|---|---|---|---|

| Sniffer | The sniffer1 used to capture this file | Any UTF-8 formatted text | yes |

| Source | The source IP/hostname this file came from when it was sniffed | Any UTF-8 formatted text | no |

| Destination | The destination IP/hostname where this file was headed to when it was sniffed | Any UTF-8 formatted text | no |

- Example:

wireshark

Incident

The “Incident” Origin specifies that the file originated from a specific security incident.

| Subfield | Description | Format/Accepted Values | Required |

|---|---|---|---|

| Incident ID | The name or ID identifying the incident from which the file originated | Any UTF-8 formatted text | yes |

| Cover Term | An optional term for the organization where an incident occurred | Any UTF-8 formatted text | no |

| Mission Team | The name of the mission team that handled the incident | Any UTF-8 formatted text | no |

| Network | The name of the network where the incident occurred | Any UTF-8 formatted text | no |

| Machine | The IP or hostname of the machine where the incident occurred | Any UTF-8 formatted text | no |

| Location | The physical/geographical location where the incident occurred | Any UTF-8 formatted text | no |

Memory Dump

The “Memory Dump” Origin specifies that the file originated from a memory dump.

| Subfield | Description | Format/Accepted Values | Required |

|---|---|---|---|

| Memory Type | The type of memory dump this file originated from | Any UTF-8 formatted text | yes |

| Parent | The SHA256 of the memory dump file in Thorium from which this file originates | A valid SHA256 of an existing file in Thorium1 | no |

| Reconstructed | The characteristics that were reconstructed in this memory dump | One or more UTF-8 formatted strings | no |

| Base Address | The virtual address where the memory dump starts | An alphanumeric memory address | no |

- Your account must have access to the parent file in order to specify it in a file’s origin

Carved

The “Carved” Origin specifies that a file was “carved out” of another file (e.g. archive, memory dump, packet capture, etc.). Unlike “Unpacked,” “Carved” describes a sample that is a simple, discrete piece of another file. It’s extraction can be easily replicated without any dynamic unpacking process.

| Subfield | Description | Format/Accepted Values | Required |

|---|---|---|---|

| Parent | The SHA256 of the original file that was carved to produce this file | A valid SHA256 of an existing file in Thorium1 | yes |

| Tool | The tool that was used to produce this transformed file | Any UTF-8 formatted text | no |

| Carved Origin | The type of file this sample was carved from (and other related metadata) | See below Carved origin subtypes | no |

- Your account must have access to the parent file in order to specify it in a file’s origin

Carved origins may also have an optional subtype defining what type of file the sample was originally carved from. The Carved subtypes are described below:

PCAP

The “Carved PCAP” Origin specifies that a file was “carved out” of a network/packet capture.

| Subfield | Description | Format/Accepted Values | Required |

|---|---|---|---|

| Source IP | The source IP address this file came from | Any valid IPv4/IPv6 | no |

| Destination IP | The destination IP address this file was going to | Any valid IPv4/IPv6 | no |

| Source Port | The source port this file was sent from | Any valid port (16-bit unsigned integer) | no |

| Destination Port | The destination port this file was going to | Any valid port (16-bit unsigned integer) | no |

| Protocol | The protocol by which this file was sent | “UDP”/“Udp”/“udp” or “TCP”/“Tcp”/“tcp” | no |

| URL | The URL this file was sent from or to if it was sent using HTTP | Any UTF-8 formatted text | no |

Unknown

The “Carved Unknown” Origin specifies that a file was “carved out” of an unknown or unspecified file type.

This origin has no other subfields except for the ones from it’s parent “Carved” origin.

Web UI

You can upload files in the Web UI by following the steps shown in the following video:

Run Pipelines

You can choose to immediately run one or more pipelines on your uploaded file by selecting them in the Run Pipelines submenu.

You can also run pipelines on the file later from the file’s page in the Web UI or using Thorctl (see

Spawning Reactions for more info on running pipelines on files).

Thorctl

It is best to use Thorctl when you have a large number of files that you want to upload. Thorctl will eagerly upload

multiple files in parallel by default, and specifying a directory to upload will recursively upload every file within

the directory tree. To upload a file or a folder of files, you can use the following command (using --file-groups/-G

go specify the groups to upload to):

thorctl files upload --file-groups <group> <files/or/folders>

If you have multiple files or folders to upload (e.g. ./hello.txt, /bin/ls, and ~/Documents), you can upload them all

in one command like so:

thorctl files upload -G example-group ./hello.txt /bin/ls ~/Documents

Uploading to Multiple Groups

You can upload to more than one group by placing commas between each group:

thorctl files upload -G <group1>,<group2>,<group3> <file/or/folder>

Or by adding multiple -G or --file-groups flags:

thorctl files upload -G <group1> -G <group2> -G <group3> <file/or/folder>

Uploading with Tags

You can also upload a file with specific tags with the --file-tags or -T flag:

thorctl files upload --file-groups <group> --file-tags Dataset=Examples --file-tags Corn=good <file/or/folder>

Because tags can contain any symbol (including commas), you must specify each tag with its own -file-tags or -T flag rather

than delimiting them with commas.

Filtering Which Files to Upload

There may be cases where you want to upload only certain files within a folder. Thorctl provides the ability to either

inclusively or exclusively filter with regular expressions using the --filter and --skip flags, respectively.

For example, to upload only files with the .exe extension within a folder, you could run the following command:

thorctl files upload --file-groups example-group --filter .*\.exe ./my-folder

Or to upload everything within a folder except for files starting with temp-, you could run this command:

thorctl files upload --file-groups example-group --skip temp-.* ./my-folder

Supply multiple filters by specifying filter flags multiple times:

thorctl files upload --file-groups example-group --filter .*\.exe --filter .*evil.* --skip temp-.* ./my-folder

The filter and skip regular expressions must adhere to the format used by the Rust regex crate. Fortunately, this format is very similar to most other popular regex types and should be relatively familiar. A helpful site to build and test your regular expressions can be found here: https://rustexp.lpil.uk

Hidden Directories

Additionally, if you want to include hidden sub-directories/files in a target directory, use the --include-hidden flag:

thorctl files upload -G example-group ./files --include-hidden

Folder Tags

Thorctl also has a feature to use file subdirectories as tag values with customizable tag keys using the --folder-tags option.

For example, say you’re uploading a directory foo with the following structure:

foo

├── foo1.txt

└── bar

├── bar1.txt

├── bar2.txt

├── baz

└── baz1.txt

└── qux

└── qux1.txt

The foo directory contains five total files spread across three subdirectories. Each tag we provide with --folder-tags

corresponds to a directory from top to bottom (including the root foo directory). So for example, if you run:

thorctl files upload -G example-group foo --folder-tags alpha/beta/gamma

The key alpha would correspond to the foo directory, beta to bar, and gamma to baz and qux. So all

files in the foo directory including files in subdirectories would get the tag alpha=foo, all files in the

bar directory would get the tag beta=bar, and so on. Below is a summary of the files and the tags they

would have after running the above command:

| File | Tags |

|---|---|

| foo1.txt | alpha=foo |

| bar1.txt | alpha=foo, beta=bar |

| bar2.txt | alpha=foo, beta=bar |

| baz1.txt | alpha=foo, beta=bar, gamma=baz |

| qux1.txt | alpha=foo, beta=bar, gamma=qux |

A few things to note:

- Because

/is used to delimit individual folder tag keys, keys cannot have/in them. - Tags correspond to subdirectory levels, not individual subdirectories, meaning files in subdirectories on the same

level will get the same tag key (like

bazandquxabove). - You don’t have to provide the same number of tags as subdirectory levels. Any files in subdirectories deeper than the

number of folder tags will receive all of their parents’ tags until the provided tags are exhausted (e.g. if

quxhad a child directory calledquuxwith a file calledquux1.txt, it would get tags forfoo,bar, andqux, but not forquux). - To skip a directory level, you can simply provide an empty tag key for that level. For example,

--folder-tags a//con filea/b/c/d.txtwould get the tagsa=aandc=cbut no tag forb. - The root

/directory in absolute paths as well as.in relative paths are ignored, so--folder-tags a/bon/a/b/c.txt,./a/b/c.txt, and./a/./b/./c.txtwould all have tagsa=aandb=b. Note that..components (e.g.a/b/../b/c.txt) are not ignored.

Adjust Number of Parallel Uploads

By default, Thorctl can perform a maximum of 10 actions in parallel at any given time. In the case of file uploads, that means

a maximum of 10 files can be uploaded concurrently. You can adjust the number of parallel actions Thorctl will attempt to conduct

using the --workers/-w flag:

thorctl --workers 20 files upload --file-groups <group> <files/or/directories>

Viewing Files

Once you have uploaded files to Thorium, you will likely want to view them and any tool results. This can be done in either the Web UI or by using Thorctl.

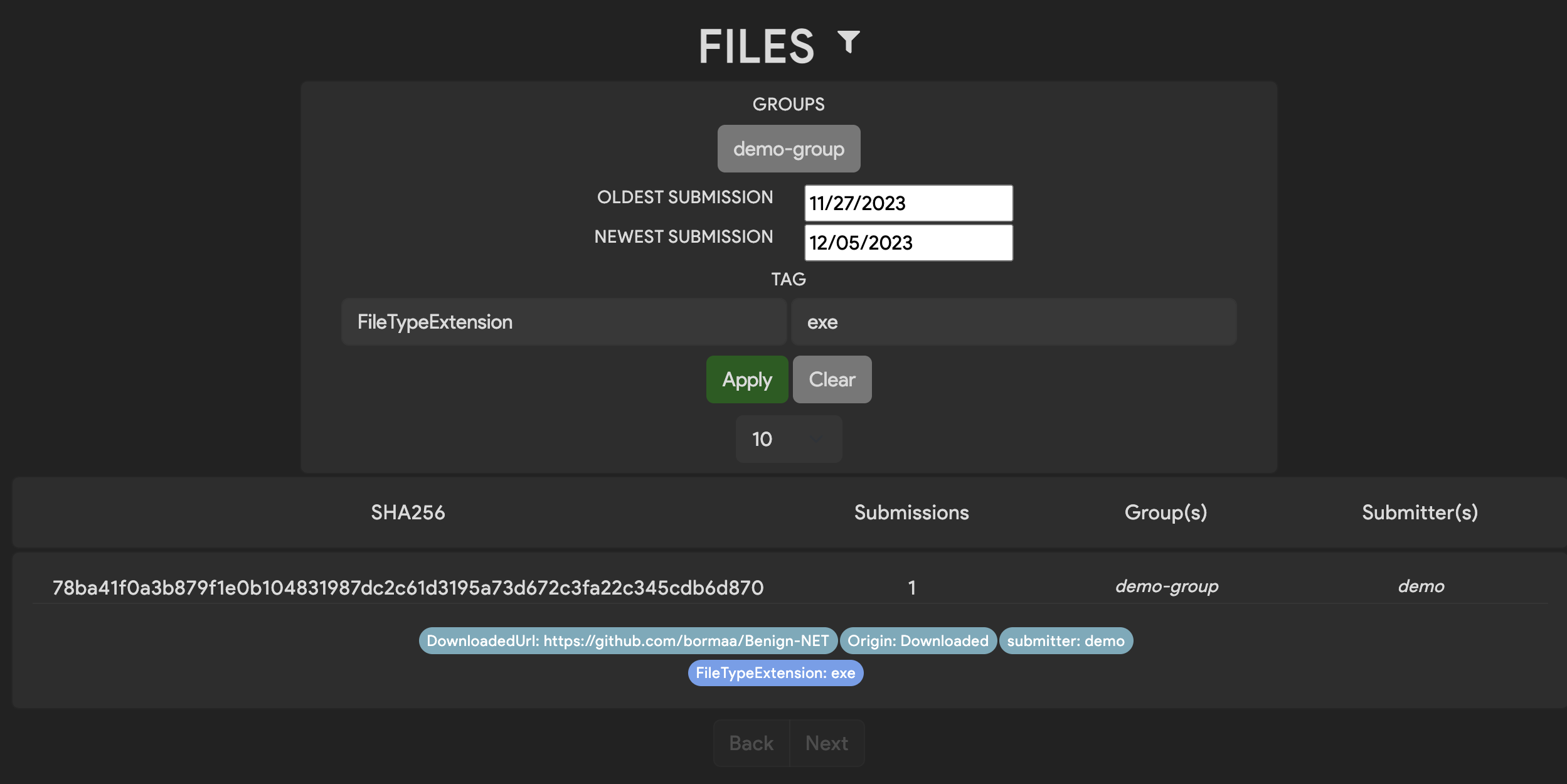

Web UI

You can find files by either going to the file browsing page as shown the video below or by searching using Thorium’s full text search which will be discussed more later. To view a files details simple click on it.

If you want to filter the files that are listed you can do that by changing the filter settings. Currently Thorium supports groups, submission date ranges, and a single key/value tag as filters. Tag key/values are case sensitive. Multi-tag filtering of files and tag normalization are both planned features that will improve file browsing.

You may notice that your files do not currently have many tags or results that can be used for filters. This is likely because we haven’t run many tools on these files. Running analysis tools on files will be covered in the spawning reactions section.

Thorctl

You can also view a list of files in Thorium using Thorctl. To do that that run the following command:

thorctl files get

The default maximum limit of files to be displayed is 50. You can change the limit by specifying the -l/--limit flag:

thorctl files get --limit 100

Filter by Group

Get a list of files belonging to a certain group or groups by adding the -g/--groups flag. Specify multiple groups by

separating each group with a comma:

thorctl files get --groups examples,shared

Filter by Tag

Get a list of files having certain tags by adding the -t/--tags flag. Unlike groups, tags must be specified each with

a separate flag, as the tags themselves may contain commas or other delimiting symbols:

thorctl files get --tags Dataset=Examples --tags Corn=good

Describe a File

Display all details of particular file in a JSON-like format by using the following command, supplying the file’s

SHA256 hash in place of the <SHA256> placeholder:

thorctl files describe <SHA256>

Tagging Files

Tags are metadata key/value pairs that are used to label the files and repositories that Thorium ingests. These labels make it easier for users and tools to find data that is stored in Thorium. It also helps users understand the data at a glance. Fields like file type, compiler, and origin info can all be tagged to better document the provenance of your data. Tags can be added when a file or repo is initially uploaded, ingested by the agent when a tool is run on that file, or added later by a Thorium user manually through the Web UI.

Tag values may contain any UTF-8 characters including symbols (e.g. Language=C++), characters from other languages (e.g. CountryOfOrigin=Україна), or even emojis (e.g. Status=✅).

Tags are currently case sensitive, but tag normalization (standardizing capitalization of existing tags in a Thorium instance) is a planned to be added in future versions of Thorium.

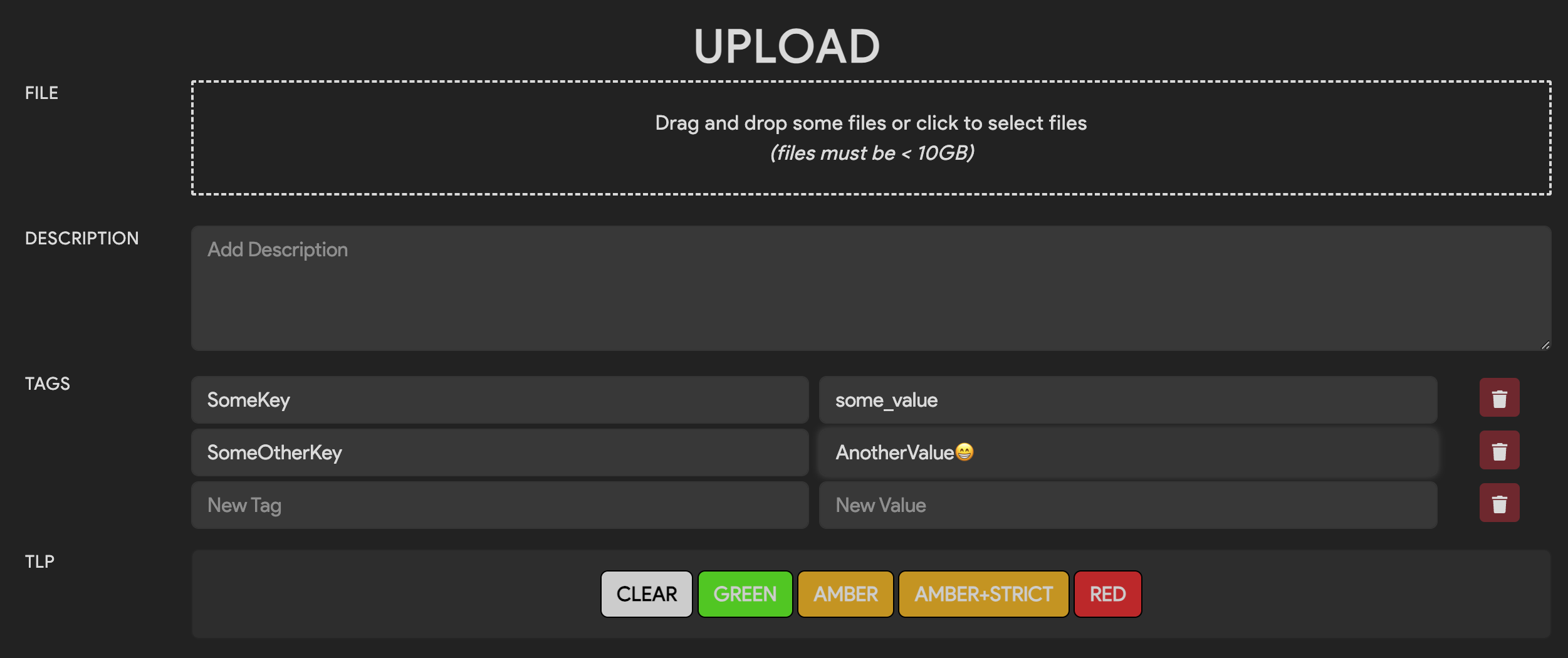

Tagging on Upload (Web UI)

You can specify tags when the file is uploaded via the Web UI. Once you have selected files to upload, enter in some key/value pairs in the tags section. Both a key and value are required to upload a given tag. Values for a tag cannot be blank/empty strings.

Tagging on Upload (Thorctl)

When using Thorctl to upload one or more file(s), you can apply key/value tag pairs with the -t key=value or

--tags key=value flag.

$ thorctl files upload --file-groups demo --tags TagKey=sometagvalue /path/to/upload

If you want to specify multiple tags to apply to each file you upload, supply the -t or --tags flag multiple

times:

$ thorctl files upload --file-groups demo --tags Key1=value1 --tags Key2=value2 /path/to/upload

Editing Tags (Web UI)

Tags can be added or removed after a sample has been uploaded via the Web UI.

Using the Web UI, navigate to the file details page and click the edit button right under the tags icon. Once you have clicked edit, you can add new tags or remove existing tags. When you are satisfied with your changes press the green save tag changes button and confirm you wish to commit those changes.

Editing Tags (Thorctl)

When using Thorctl to tag an existing file, use the tags command.

$ thorctl tags add --add-tags platform=MacOS --files 619dddbd681b593d8c6f66de50dd41e422bfac7a83feab6c3f0944de7c73a4d3

To add multiple tags at once, repeat the --add-tags option.

$ thorctl tags add --add-tags platform=MacOS --add-tags OS_Version=13.7.4 --files 619dddbd681b593d8c6f66de50dd41e422bfac7a83feab6c3f0944de7c73a4d3

To delete a tag, you must specify the complete name=value tag to remove.

$ thorctl tags delete --delete-tags "stripped=false" --files 619dddbd681b593d8c6f66de50dd41e422bfac7a83feab6c3f0944de7c73a4d3

Be aware that thorctl will report success for deleting a non-existent tag.

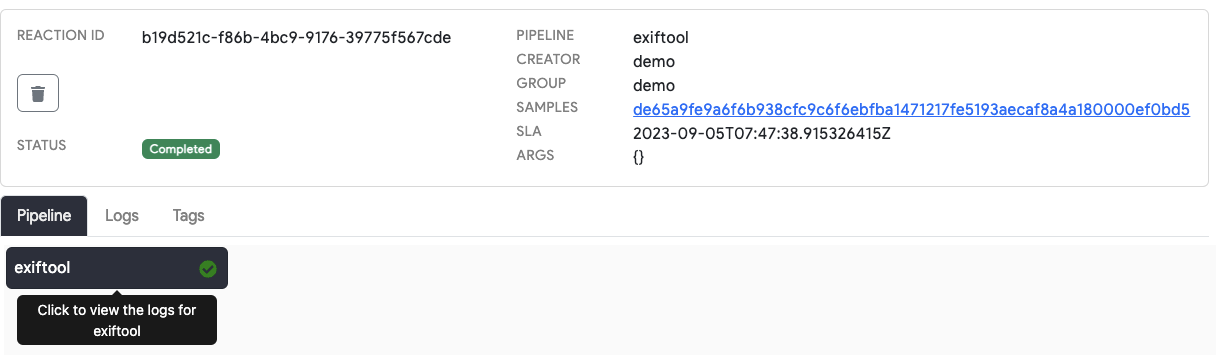

Spawning Reactions

In Thorium terminology, a reaction is a unit of work where one or more tools run on some data within a pipeline.

Thorium allows for many tools, called images, to be strung together into sequential or parallel stages of

a pipeline. The process for configuring images to run within Thorium and building pipelines is covered in detail

within the Developer chapters.

WebUI

The Web UI currently only allows users to spawn reactions for a single file at a time. If you wish to spawn reactions on many files, follow the Thorctl examples below. Once you have spawned a reaction, you can follow its progress and even view the stdout/stderr in the logs for that reaction stage. This allows you to easily troubleshoot tools if your analysis jobs fail to complete successfully.

Thorctl

Thorctl allows you to spawn reactions for a single file or many files at once. Use the following command to spawn a single reaction on a specific file using the file’s SHA256 hash:

thorctl reactions create --group <PIPELINE_GROUP> --pipeline <PIPELINE> <SHA256>

If you want to run a pipeline on files that have a specific tag or tags, add the -t/--tags flag and specify a tag in the format

KEY=VALUE as shown below:

thorctl reactions create --limit <LIMIT> --group <PIPELINE_GROUP> --pipeline <PIPELINE> --tags Datatset=Examples

To specify multiple tags, enter a -t/--tags flag for each tag:

thorctl reactions create --limit <LIMIT> --group <PIPELINE_GROUP> --pipeline <PIPELINE> --tags Tag1=Hello --tags Tag2=Goodbye

You can also watch the status of reactions using --watch or -W.

$ thorctl reactions create --group demo --pipeline test-pipeline --watch

CODE | PIPELINE | SAMPLES | ID | MESSAGE

-----+---------------------------+------------------------------------------------------------------+--------------------------------------+----------------------------------

200 | test-pipeline | 85622c435c5d605bc0a226fa05f94db7e030403bbad56e6b6933c6b0eda06ab5 | a0498ac4-42db-4fe0-884a-e28876ec3496 | -

-----+---------------------------+------------------------------------------------------------------+--------------------------------------+----------------------------------

WATCHING REACTIONS

STATUS | PIPELINE | ID

-------------+---------------------------+--------------------------------------

...

Thorctl Run

You can also quickly create a reaction, monitor its progress, and save its results to disk using the thorctl run command:

thorctl run <PIPELINE> <SHA256>

Unlike thorctl reactions create, thorctl run will display the stdout/stderr output of each stage in real time and

automatically save the results to disk, effectively emulating running the reaction locally on your machine. This might be

preferable to thorctl reactions create for running a quick, one-off reaction.

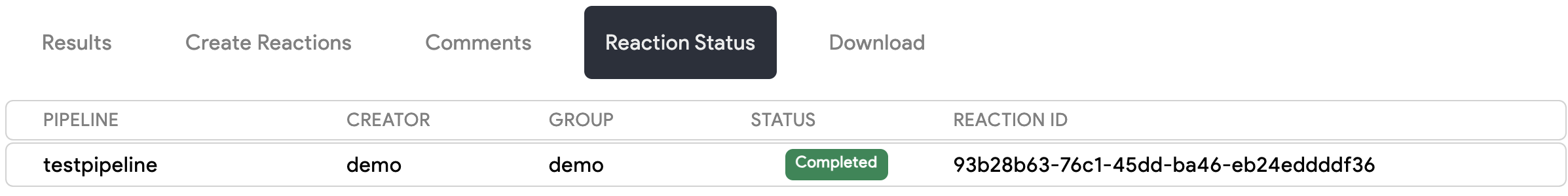

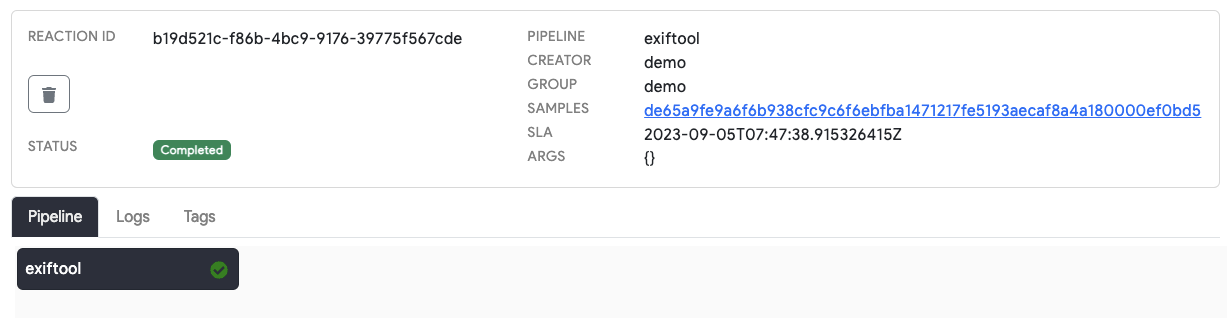

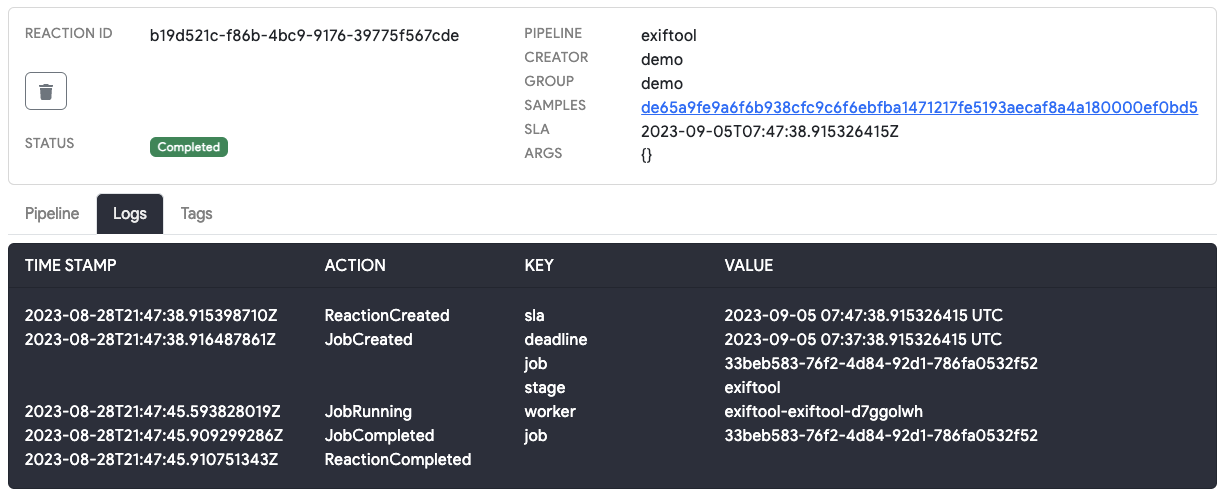

Reaction Status

The status of a reaction can be used for monitoring the progress of the analysis jobs you create. You can view the

status of reactions on the file details page through the Web UI or using the -W flag when submitting reactions using

Thorctl.

After a reaction has been submitted, its initial status is Created. Reactions that have been scheduled by the Thorium

Scaler and executed by an Agent process will enter the Running state. These reactions will run until either the

tool completes successfully, returns an error code, or is terminated by Thorium for exceeding its runtime specification

(resources limits or max runtime). All failure states will cause the reaction to enter the Failed state. Successful

runs of all images within the pipeline will cause the reaction to be marked as Completed.

| Status | Definition |

|---|---|

| Created | The reaction has been created but is not yet running. |

| Running | At least one stage of the reaction has started. |

| Completed | This reaction has completed successfully. |

| Failed | The reaction has failed due to an error. |

Reaction Lifetimes

Once a reaction has reached its terminal state (Completed or Failed), the reaction status and logs will see no

future updates. Thorium applies a lifespan of 2 weeks for reactions that have reached a terminal state. After this

lifespan has been reached, Thorium will cleanup info about the expired reaction. This cleanup does not delete tool

results and only affects reaction metadata such as the reaction’s status and logs. This helps to prevent infinite

growth of Thorium’s high consistency in-memory database, Redis. Because of this cleanup, users may not see any

Reactions listed in the Reaction Status section of the Web UI file details page even when tool results are visible.

Search

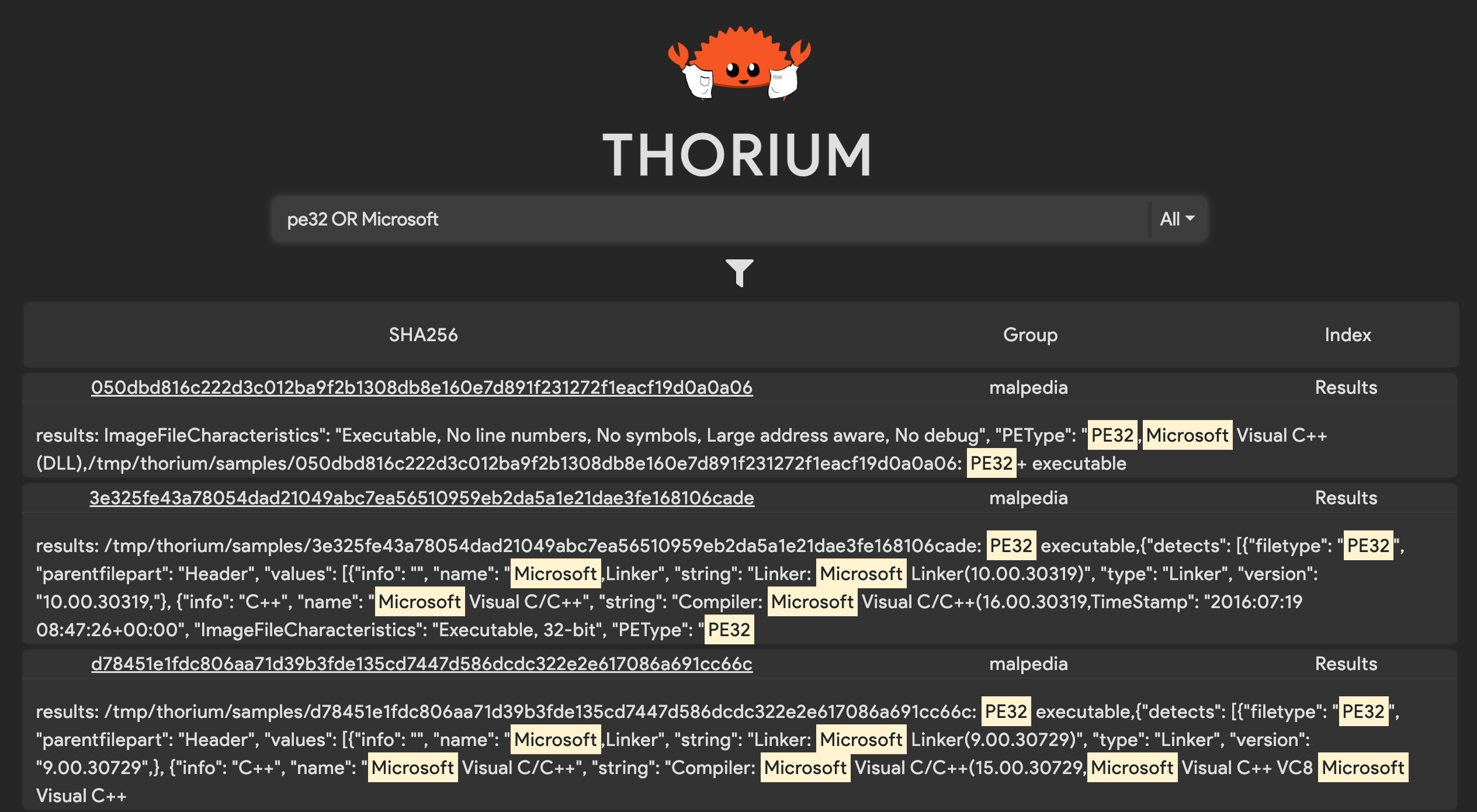

Thorium also allows users to search through tool results and file tags to find interesting files. This is currently only available in the Web UI and can be accessed on the home page. Thorium uses the Lucene syntax for search queries.

It’s important to note that documents are indexed per group. This means that for a document to be returned, all search parametes must be met by at least one group (see the below FAQ for more details).

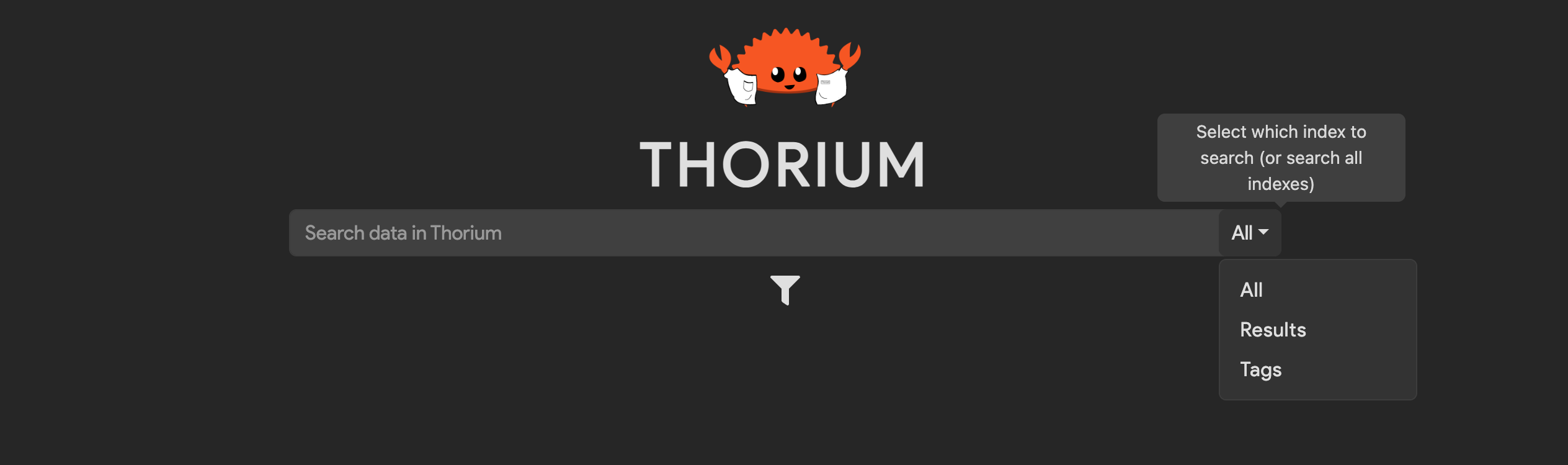

Indexes

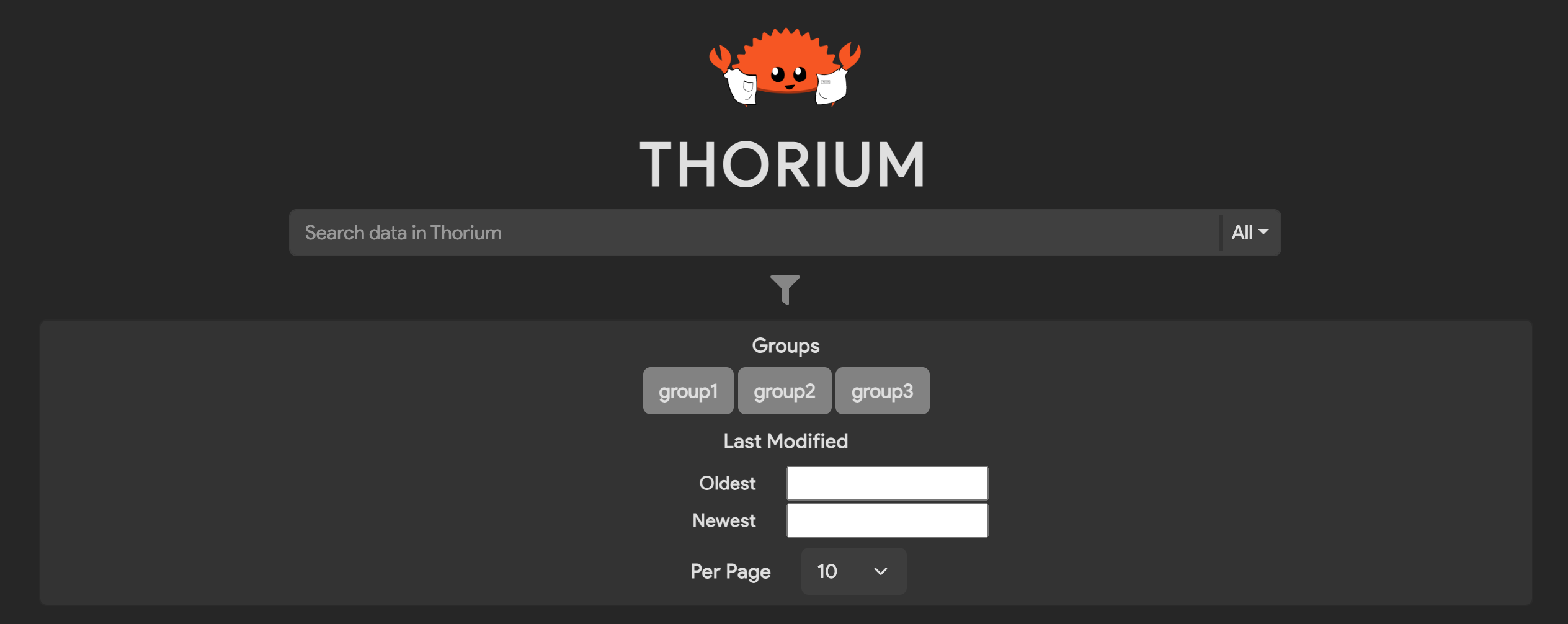

Data is stored in Elasticsearch in various indexes based on the data type (i.e. results are stored separately from tags). You can select the index to search on by selecting the dropdown on the right of the search bar. Searches are performed on all indexes by default.

Search Parameters

You can specify various parameters for your search by clicking the funnel icon below the search bar. Parameters include the sample’s group(s), the date the sample was last modified, and the number of search results to display per page. By default, no groups are selected and searches are performed on all of the user’s groups.

Examples

The following are examples of possibl search queries using Lucene syntax.

Querying for tags/results containing the text pe32:

pe32

Querying for tags/results containing pe32 or Microsoft:

pe32 OR Microsoft

Querying for tags/results containing rust and x86_64:

rust AND x86_64

Querying for tags/results containing the string rust and x86_64. Use quotes to

wrap search queries that contain white space or conditional keywords:

"rust and x86_64"

Querying for tags/results containing the string rust and x86_64 and pe32:

"rust and x86_64" AND pe32

Querying for tags/results containing pe32 or string rust and x86_64 and pe32:

pe32 OR ("rust and x86_64" AND pe32)

All UTF-8 text is supported:

✨Some 🤪 UTF-8 😁 text!!!✨

Results

Querying for results where a field named PEType is set to "PE32+"

"PEType:\"PE32+\""

Tags

Note: Tags are stored in Elasticsearch with

=delimiting the key and value. That means that the most effective way for searching for whole tags is by using=. Using other delimiters (e.g.:) may cause otherwise matching tags to not appear.

Querying for the tag arch=x86_64:

arch=x86_64

Querying for tags exactly matching arch=x86_64 (use quotes):

"arch=x86_64"

Querying for files with either the tag arch=x86_64 or arch=arm64:

arch=x86_64 OR arch=x86_64

Querying for files with both tags Lang=Rust and arch=x86_64:

Lang=Rust AND arch=x86_64

If tags contain Lucene keywords (e.g. AND, OR, etc.), use quotes:

"Tag With Spaces=Keywords AND OR" AND "Tag=Value"

FAQ

Why does it take some time for data to become searchable?

It can take some time (usually < 10 seconds) for results to be searchable in

Thorium because they are indexed asynchronusly by a separate component called

the Thorium search-streamer. The time it takes for the search-streamer

to index data depends on how much data has been added/modified/deleted in

Thorium recently.

What do groups have to do with searching in Thorium?

Due to Thorium’s permissioning requirements and how Elastic operates, a file will have a separate document in Elastic for each group containing results/tags visible in that group. That means that for a file to appear in the search results, all of the given search parameters must for at least one group.

For example, let’s say we have one sample with a placeholder SHA256 of my-sha256. This

sample is in two groups, GroupA and GroupB. The file has tags in both groups, but

not all of them are shared between groups. Our data would look something like this:

[

{

"sha256": "my-sha256",

"group": "GroupA",

"tags": [

"Corn": "IsGood",

"HasTaste": "true",

"Fliffy": "IsAGoodDog"

]

},

{

"sha256": "my-sha256",

"group": "GroupB",

"tags": [

"Corn": "IsBad",

"HasTaste": "false",

"Fliffy": "IsAGoodDog"

]

}

]

So the following query would return the sample from both groups because both of the groups have the given tag:

Query: "Fliffy=IsAGoodDog"

Results:

"my-sha256", "GroupA"

"my-sha256", "GroupB"

But this query would return no samples because neither of the groups have both of the given tags:

Query: "Corn=IsBad AND HasTast=true"

Results:

Viewing Results

Tool results are created when a pipeline is run on a target file. The running pipeline instance is called a

reaction and may involve running several tools (called images) on the target file. The analysis artifacts created

by each tool are then automatically stored in Thorium after each pipeline stage has completed. We organize tool

results based on the name of the tool/image rather than the name of the pipeline where that tool was run.

Tools may generate several types of result output, including renderable and downloadable formats. These artifacts include:

- results: renderable data including basic text and JSON formatted tool output

- result-files: downloadable files produced by the tool and any tool results larger than 1MB

- children: unpacked or transformed files that Thorium treats like sample files due to potential maliciousness.

You can view or download results and child files from both the Web UI and Thorctl.

Web UI

Results and Result Files

You can navigate to the details page for a file using the sha256 hash of that file, or by browsing and searching

through Thorium’s data. If you are already on the file details page and see your reaction’s have completed, refresh the

page to get the latest tool results!

Once you load the file details page, click the Results tab that’s displayed after the submission info section. You

should see tool results that you can scroll through as shown in the video below.

You can also jump to results by clicking on the tools corresponding tag for a particular tool result.

Tools can create renderable results as well as result files. If a tool produces a result files, those files can be downloaded using the links at the bottom of the result section for that tool.

The number of result files that a tool produced will be displayed on the header of the results section. That file count badge can be clicked to jump to the result files links.

Children Files

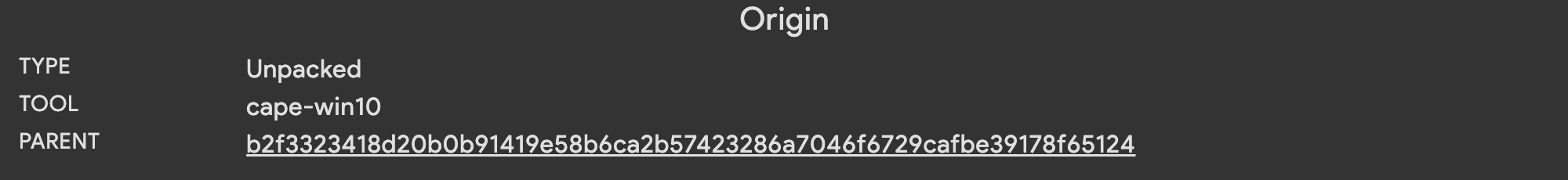

Many tools will produce entirely new samples called children files that are saved in Thorium after the tool exits.

For example, an unpacking tool might remove protective/obfuscating layers of a given malware sample in order to unpack

the core payload and save it as a new sample in Thorium for further analysis. The sample that a tool was run on to

produce a child file its parent file. The origin information on a child file’s details page contains a convenient link

to the child’s parent. Clicking the link will take you to the sample details of the parent file.

Thorctl

You can download results for specific samples using Thorctl with the following command:

thorctl results get <SHA256>

Download results for multiple samples by passing multable file SHA’s:

thorctl results get <SHA256-1> <SHA256-2> <SHA256-3>

If you want to download results for specific tools then you can use the following command:

thorctl results get --tools <TOOL> --tools <TOOL> <SHA256>

You can also get results for any samples with a certain tag with the following command:

thorctl results get --tags Dataset=Examples

The tool and tag flags can be set together to get the results of running a tool on samples with a particular characteristic:

thorctl results get --tools analyzer --tags Packed=True

The number of results from which Thorctl downloads files is limited to prevent inadvertent massive download requests.

To change the limit, use the --limit/-l flag:

thorctl results get --tags Incident=10001234 --limit 100

Downloading Files

If you need to download a file to carry out further manual analysis steps, you can do so via the Web UI or Thorctl. Because samples stored in Thorium are often malicious, they are downloaded from Thorium in a non-executable state, either in a safe CaRTed format or as encrypted ZIP files. This means that before a downloaded file can be analyzed, it must be either be unCaRTed or decrypted/extracted from the ZIP archive to return it to its original, potentially executable, state. If you are working with malicious or potentially malicious files, only unCaRT them in a safe location such as a firewalled virtual machine. Keep in mind that most anti-virus applications will immediately detect and quarantine known malware after extraction, so disabling anti-virus applications entirely may be necessary to effectively extract the sample. Be careful when dealing with extracted malware samples!

Cart vs Encrypted Zip

Thorium supports two different download types each with its own pros and cons:

| Capability | CaRT | Encrypted Zip |

|---|---|---|

| Encrypted | ✅ | ✅ |

| Compressed | ✅ | ✅ |

| Streaming Extraction | ✅ | ❌ |

| API Load | low | high |

| Native Windows/Linux/Mac Support | ❌ | ✅ |

At a high level encrypted zips are more user friendly but are less performant and cause a high load on the API. When peforming actions at scale or working with large files using CaRT is highly recommended.

Web UI Sample File Download

You can choose between CaRTed and encrypted ZIP format when downloading files using the Thorium Web UI. If the file is in the CaRTed format You will need to use a tool such as Thorctl to unCaRT the file after it has been downloaded and moved into a sandboxed environment.

Thorctl File Download

Alternatively, you may use Thorctl to download the file on the command line in either a CaRTed or unCaRTed format. You can download a single file by its sha256 hash using the following Thorctl command:

thorctl files download <sha256>

Thorctl’s current behavior is to download the file in a CaRTed format by default. Downloading files as encrypted ZIP’s is not

currently supported in Thorctl. If you want to immediately unCaRT the file, you can use the -u or --uncarted flag.

thorctl files download --uncarted <sha256>

If you want to download the file to a different path, that is not in the current working directory, you can use the

-o/--output flag.

thorctl files download --output /path/to/download/directory <sha256>

You can also download multiple files by specifying a metadata tag that the downloaded files must have and the -l/--limit flag

to specify how many files you would like to download.

thorctl files download --carted --limit 100 --tags Incident=10001234

If you do not specify a limit count when you provide a key/value tag, Thorctl will default to downloading a maximum of 10 files.

CaRTing/UnCaRTing Files

Thorctl also has the ability to CaRT and unCaRT local files. This is particularly helpful if you want to download a file in a CaRTed format and then unCaRT it in a quarantined location later or CaRT files to store after analysis is complete.

CaRTing Files

To CaRT a file, simply run:

thorctl cart <path-to-file>

You can also CaRT multiple files in one command:

thorctl cart <path-to-file1> <path-to-file2> <path-to-file3>

Specifying an Output Directory

CaRTing with Thorctl will create a directory called “carted” in your current directory containing the CaRTed files

with the .cart extension. To specify an output directory to save the CaRTed files to, use the -o or --output flag:

thorctl cart --output ./files/my-carted-files <path-to-file>

CaRTing In-Place

You can also CaRT the files in-place, replacing the original files with the new CaRTed files, by using the

--in-place flag:

thorctl cart --in-place <path-to-file>

CaRTing Directories

Giving the path of a directory to CaRT will recursively CaRT every file within the directory.

thorctl cart <path-to-dir>

Because CaRTed files will be saved together in one output folder, collisions can occur if files have the same name

within a directory structure. For example, let’s say I have a directory called my-dir with the following structure:

my-dir

├── dir1

│ └── malware.exe

└── dir2

└── malware.exe

Because Thorctl will recursively CaRT all files within my-dir and save them in one output directory, one

malware.exe.cart will overwrite the other. To avoid such collisions, you can either use the aforementioned

--in-place flag to CaRT the files in-place or use the -D or --preserve-dir-structure flag to output files in a

structure identical to the input directory. So CaRTing my-dir with the above structure using the

--preserve-dir-structure option would yield the output directory carted, having the following structure:

carted

└── my-dir

├── dir1

│ └── malware.exe.cart

└── dir2

└── malware.exe.cart

Filtering Which Files to CaRT

There may be cases where you want to CaRT only certain files within a folder. Thorctl provides the ability to either

inclusively or exclusively filter with regular expressions using the --filter and --skip flags, respectively.

For example, to CaRT only files with the .exe extension within a directory, you could run the following command:

thorctl files cart --filter .*\.exe ./my-dir

Or to CaRT everything within a directory except for files starting with temp-, you could run this command:

thorctl files cart --skip temp-.* ./my-dir

Supply multiple filters by specifying filter flags multiple times:

thorctl files cart --filter .*\.exe --filter .*evil.* --skip temp-.* ./my-dir

The filter and skip regular expressions must adhere to the format used by the Rust regex crate. Fortunately, this format is very similar to most other popular regex types and should be relatively familiar. A helpful site to build and test your regular expressions can be found here: https://rustexp.lpil.uk

UnCaRTing Files

UnCaRTing in Thorctl looks very similar to CaRTing as explained above but uses the uncart command instead:

thorctl uncart <path-to-CaRT-file>

You can specify multiple CaRT files, unCaRT in-place, preserve the input directory structure, and apply filename

filters just as with the cart command. For example:

thorctl uncart --filter .*\.cart --skip temp-.* --output ./my-output --preserve-dir-structure ./my-carts hello.cart

Commenting on Files

Commenting on files is a great way to share your progress and insights from analyzing a file. Anyone that is a member of at least one of your groups that has access to the file will be able to view your comment. You can leave a comment on a file through the Web UI by following the steps shown in the video below.

Comment Attachments

You can also upload files as attachments to your comments. Unlike files/samples, comment attachments are not stored in the safe CaRTed format. Only submit benign data as a comment attachment.

If you need to submit a manually modified version of

a sample, you can do so by uploading the modified sample with an Origin of Unpacked or Transformed and with the

Parent value set to the SHA256 of the original file.

Revoking Your Token

If for some reason you need to revoke your Thorium token, you can do so via the profile page in the Web UI. When you click the revoke button you will see a warning:

Revoking your token will automatically log you out of this page and any currently running or queued analysis jobs (reactions) may fail. Are you sure?Reactions run as your user and with your user’s Thorium token. As a result, revoking your token will cause any currently

Runningreactions to fail. This includes reactions in theRunningstate or reactions in theCreatedstate that start to run before the revocation process completes. You can always resubmit reactions that fail after you have revoked your token.If you are sure you want to revoke your token, click confirm. After the token has been revoked, you will be logged out of your user session and redirected to the login page.

Developers

Thorium developers have all the abilities of someone with the User system role, but have the added ability to create

and modify analysis tools (called “images”) and build pipelines from those tools. Just like a Thorium user, developers

can:

- upload files and Git repositories

- add and remove metadata tags on uploaded files and repositories

- run a pipeline on a file or repository (called a reaction)

- view reaction status and logs

- view tool results

- comment on files and upload comment attachments

- create new groups

Additionally, developers can:

- Create, modify, and delete images and pipelines.

A developer must have adequate group permissions (via their group role) to create, modify or delete an image/pipeline

within a group. They must be an Owner, Manager or User within the group to create resources in that group.

The Monitor role grants view-only permissions and does not allow the group member to create, modify or delete group

resources.

What Are Images?

In Thorium a tool is called an image. Images are a combination of a command line (CLI) tool and all the configuration information needed to run that tool in one of Thorium’s execution environments. Before you can add or modify an image, you must have the developer role. If you don’t yet have that role, you may request it from your local Thorium admins. Once you have the developer role, you can learn about the process for adding new images and how to correctly configure images.

Creating/Adding A New Image

To add a new image, you must tell Thorium how to run your tool via the image’s configuration settings. This runtime configuration may seem complicated, but has been designed to minimize or eliminate the need to customize your tool to work within Thorium. You tell Thorium how to run your tool and where your tool writes its outputs/results and Thorium can then handle executing your image within an analysis pipeline. Your tool does not need to know how to communicate with the Thorium API. Because of this functionality, any command line (CLI) tool that can run in a container or on bare metal can be added as a new image without any customization.

You may add a new image using the Web UI as shown in the following video. Adding images is not currently supported via Thorctl.

If you want to know more about the available image configuration options, you can go to the next section that explains how to configure an images. This section covers the required image configuration settings as well as the more advanced optional settings.

Configuring Images

This section explains each configurable image field. This information is critical to getting your image to run in Thorium. so please read each field description before attempting to add a new image. Images with incorrect configurations may fail when run or never be run at all.

Name

(Required)

Your image needs a name. Names must be unique within a group and can only consist of lower case alpha-numeric characters and dashes.

Group

(Required)

Assign your image to a group. An image may only be in a single group, although you can easily copy your image to different groups using the Web UI. Images are just a configuration so there is no waste in having duplicates.

Description

(Optional)

A basic text description of what this tool does and what analysis artifacts it may produce. It will be displayed in the image details, so please help users of your tool by providing a thorough description..

Scaler

(Required)

The Thorium scheduler that will be responsible for running this image. For containerized tools that execute static

analysis on files or repos, select K8s. If your tool must be run on bare metal hardware or does dynamic analysis,

please contact an admin for help with the setup of a BareMetal image.

| Scheduler | Description | Admin Setup Help Required |

|---|---|---|

| K8s | Scheduled by the Thorium Kubernetes scheduler, k8s scheduled tools are run in containers. | No |

| BareMetal | Scheduled by the Thorium BareMetal scheduler, BareMetal tools runs directly on a server outside of a container or VM. | Yes |

| External | Scheduling of external jobs is not handled by Thorium, external tools must interact with the API to get jobs and update job status. | No |

Image/Tag

(Required for K8s scheduled images)

For containerized tools that use the K8s scheduler, enter the image url:port/path:tag for the registry image. For

example, an image pulled from hub.docker.com would enter ubuntu:latest since its a default registry. A different

registry would use the registries URL and port to tell Thorium where to go to grab the container image.

registry.domain:5000/registry/path:v1.0

If the registry you specify requires authentication, you will need to provide a registry token for Thorium to pull your image before it will run. Please reach out to your local Thorium admins to provide that registry token.

Version

(Optional)

The version of the underlying tool that is executed when this image is run. Ideally the version you provide should formatted using semantic versioning, however this is not a requirement.

Timeout

(Required)

The maximum time an image will be allowed to run in seconds. A running image will be killed after this time limit has been reached.

Display Type

(Required)

If this tool produces any results, this configures what format are those results take. This setting is used by the Web UI to display any renderable tool results on the file/repo details page. For images that do not return results, select any value.

| Type | Description |

|---|---|

| Json | For a results file that is valid JSON, an interactive JSON renderer will be displayed. Url links to children files and downloadable result_files links will be displayed. |

| String | Plain text results get rendered along with links to children files and downloadable result_files links. |

| Image | Render any result_files images as well as any text output from the results file. Links to children files and downloadable result_files links are also displayed. Result_files must contain a valid image extension to be rendered in the Web UI. |

| Table | Display tool results as a two column table. Links to children files and downloadable result_files links are also displayed. |

| Disassembly | Display tool results as disassembled code from binary. The renderer will apply syntax highlighting if it recognizes the language of the file. |

| Hidden | Do not render results produced by this tool. Results will be hidden from users in the Web UI. |

| Custom | Custom renderers can be built for specific tools and added by Thorium’s Web UI developers. |

Spawn Limit

(Optional, defaults to Unlimited)

The max number of running images of this type that the Thorium scaler will attempt to spawn.

Collect Logs

(Optional, defaults true)

Boolean value on whether Thorium will collect stdout and stderr as logs from this image. Reaction logs can be viewed during and after a reaction runs and are useful for troubleshooting broken tools.

Generator

(Optional, defaults false)

Boolean value on whether this image will require Thorium to respawn it after it sleeps. This is useful for building long running tools that must checkpoint/sleep and then subsequently be respawned. This is an advanced feature that most tools/developers will ignore.

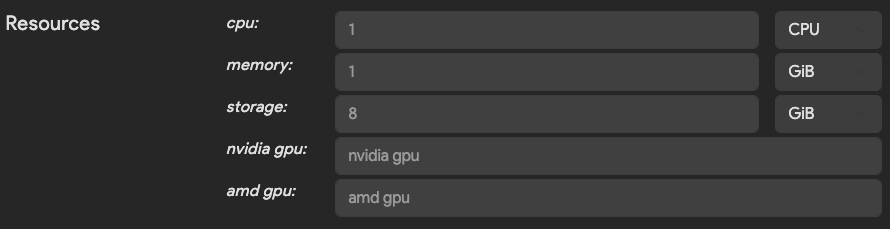

Resources

(Optional, defaults are set for CPU/Memory only)

The resources section tells Thorium what compute, memory, and storage resources your tool needs to successfully run. Values for CPUs, memory, ephemeral storage, and GPUs may all be set.

You should set these values to the largest expected value your tool will need to run. If your tool’s peak memory usage is 16GB, select at least that amount for the image’s memory resource configuration. If you set a value that is too low for memory or CPU usage, your tool may run slowly and/or be killed for using more resources than this configuration allows. On the other hand, selecting values that are too high for any resource may limit or prevent Thorium from scheduling your tool to run. Thorium can only schedule an image to run on systems where there are resources available to meet the requested resource values for that image.

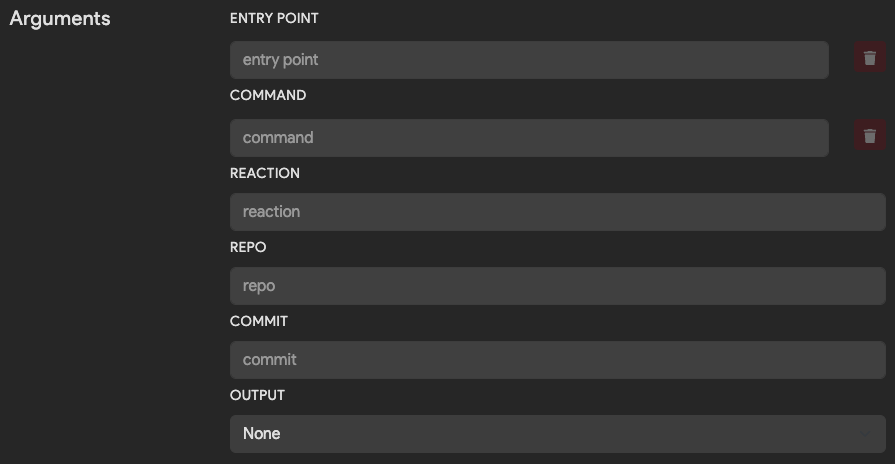

Arguments

(Optional)

Arguments define how Thorium will trigger the execution of your tool. Command line arguments for a tool are built based on this configuration.

The following table explains what each optional argument does and when to specify it.

| Argument | Description | Example |

|---|---|---|

| Entrypoint | The initial executable to run. | /path/to/python3 |

| Command | List of parameters to pass to the Entrypoint executable, one value per form field. | /path/to/script.py, --flag, some_value |

| Reaction | Flag to pass in UUID of the running Thorium reaction. Only specify if the tool needs a unique identifier. | --reaction |

| Repo | Flag to pass in repo name if a repo is being analyzed. | --repo |

| Commit | Flag to pass in commit hash if a repo is being analyzed. | --commit |

| Output | How to tell the tool where to place tool results/outputs. | Append to place the output path at the end of the command line args or use Kwargs and specify a flag to pass in the path such as --output |

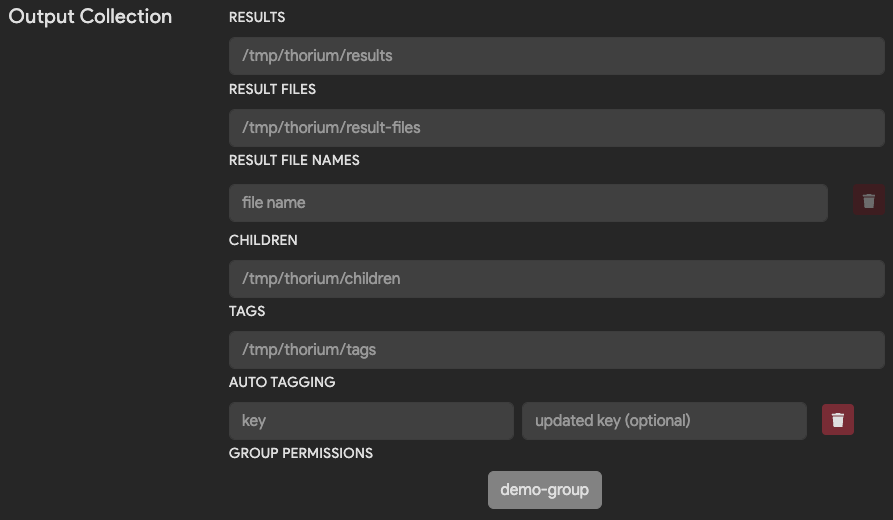

Output Collection

(Optional)

After a tool has run, the Thorium agent will collect tool results, children files, and metadata tags. The Thorium agent, will then upload these artifacts to the API so they are accessible within the Web UI or using Thorctl. The output collection settings tell the agent how to handle these artifacts.

The following table explains how to configure the agent to handle your tools outputs.

| Field | Description | Default |

|---|---|---|

| Results | Path to a renderable result file. The contents of the file will be displayed in the Web UI based on the display_type setting. | /tmp/thorium/results |

| Result Files Path | Path to a directory of result files to upload. Result files will be displayed as downloadable links in the Web UI. | /tmp/thorium/result-files |

| Result File Names | Names of specific result files to upload. If specified all other files will be ignored. | None |

| Children | Path to a directory of children files produced by running the tool. Within this directory, children must be placed into a subdirectory with the type of child as its name: unpacked or source. Children files are automatically CaRTed upon upload to Thorium. | /tmp/thorium/children |

| Tags | Path to a JSON dictionary of key/value pairs to upload as tags. This file must be valid JSON. | /tmp/thorium/tags |

| Group Permissions | Groups to which results and tags are uploaded. By default tool results are upload to all your groups that have access to the target file/repo. Use this when you are working with potentially sensitive tools. | None |

When a tool’s analysis result is a valid JSON dictionary, the agent can automatically pull key/value tags and upload

them to the file or repo that the tool ran against. The following table explains how to configure Auto Tagging.

| Field | Description |

|---|---|

| Key | The matched key in the tools JSON result dictionary. The key must be at the root level of the dictionary. |

| New Key/Updated Key | The renamed string that will get uploaded as the new tag’s key. |

Child Filters

(Optional)

Child filters allow users to specify regular expressions to match on children files before submitting them. This is especially helpful working with a tool that outputs many files to its configured children directories with no easy way to filter them in the tool itself.

By default, children that match at least one filter will be submitted. If Submit Non-Matches is set, only

children that don’t match any of the given filters will be submitted. If no child filters are provided,

all children will always be submitted.

All filters must be valid regular expressions parseable by the Rust regex crate. If an invalid regular expression is provided, Thorium will return an error.

MIME Filters

MIME filters match on the MIME type of a file according to its magic number. Supported MIME types can be found

here.

For example, if you want to match only image MIME types, you can provide the filter image.*.

File Name Filters

File name filters match on children’s file names, including their extension. For example, if you want to submit

only children starting with lib, you can provide the filter lib.*.

File Extension Filters

File extension filters match on children’s file extensions without the leading . (exe, txt, so, etc.).

For example, if you want to submit only exe files, you can provide the filter exe.

Dependencies

(Optional)

Any samples, repos, tool results, or ephemeral files that your tool needs to run will be configured here. These configuration options help Thorium tell your tool where to find the tool’s dependencies that are automatically downloaded by the Agent before your tool is executed.

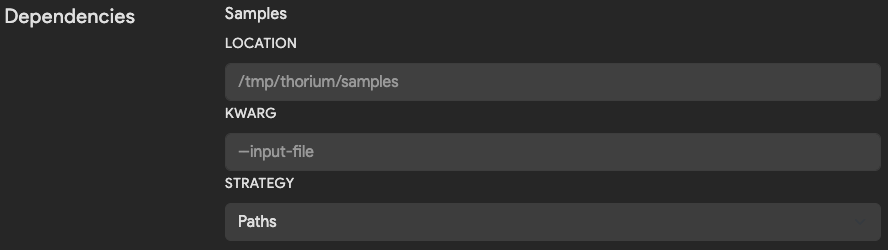

Samples

The Samples settings are relevant for tools used to analyze Thorium files. Since all files in Thorium are stored

in a CaRTed format, the agent handles the download and unCaRTing of those files before executing your tool. You may

use the sample dependency settings to change the default download path and how Thorium tells your tool where to find

the downloaded file(s).

| Field | Description | Default |

|---|---|---|

| Location | Directory path to download files into. Downloaded files are named based on their sha256 hash. | /tmp/thorium/samples |

| Kwarg | Flag used to pass in name or path to file. If blank, positional args are used to pass in value. | None |

| Strategy | Whether to pass in path or name to the kwarg. Options are Path, Names or Disabled when no argument should be passed. | Path |

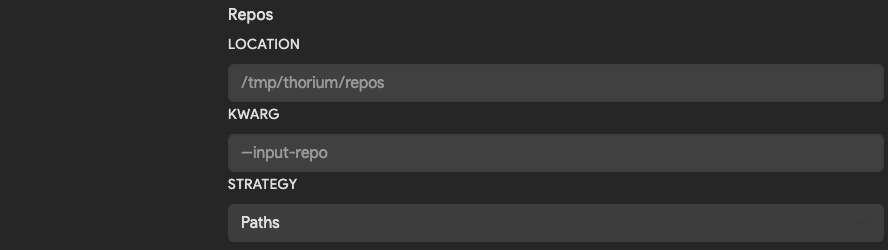

Repos

If a tool takes a Git repository as an input, this section can be configured to change the default location the Agent will download that repo into. Since all repos in Thorium are CaRTed upon upload, the agent will download and automatically unCaRT downloaded repos.

| Field | Description | Default |

|---|---|---|

| Location | Directory path to download repos into. | /tmp/thorium/repos |

| Kwarg | Flag used to pass in name or path to a repo. If blank, positional args are used to pass in value. | None |

| Strategy | Whether to pass in path or name to the kwarg. Options are Path, Names or Disabled when no argument should be passed. | Path |

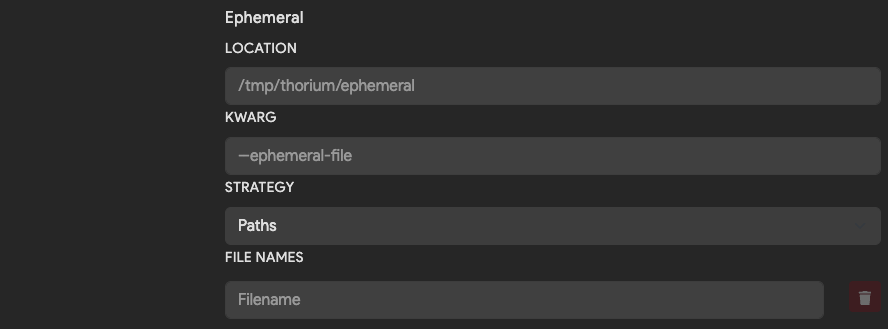

Ephemeral and Results

Sometimes tools may take the outputs from another tool as their input. These tools will be configured to run in a multistage pipeline after being added. The below fields tell Thorium what inputs a tool requires and where to download those inputs into before running the tool runs.

What are ephemeral files?

Ephemeral files are discarded at the end of a pipeline run. You might call these files intermediate artifacts of the pipeline. They can be passed between the images that run within a pipeline using the following configurations.

| Field | Description | Default |

|---|---|---|

| Location | Directory path to download ephemeral files into. | /tmp/thorium/ephemeral |

| Kwarg | Flag used to pass in name or path of each ephemeral file. If blank, positional args are used to pass in value. | None |

| Strategy | Whether to pass in path or name to the kwarg. Options are Path, Names or Disabled when no argument should be passed. | Path |

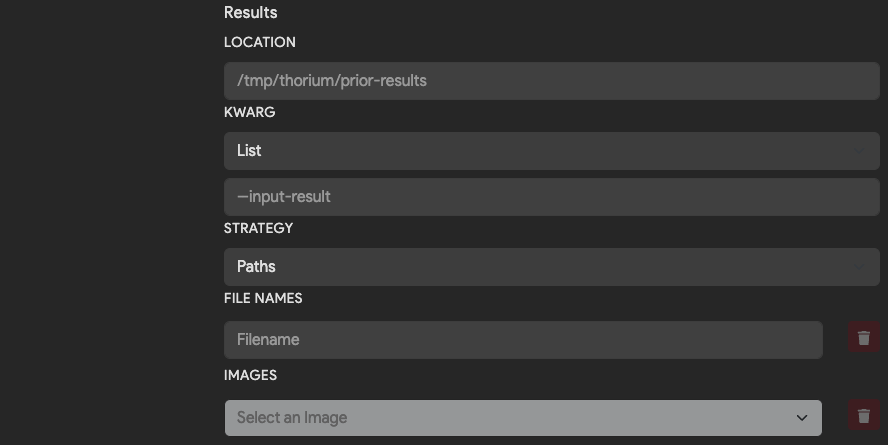

What are results?

Results are files that will be saved in Thorium after a tool runs. Unlike ephemeral files, the lifetime of a result is longer than the pipeline that generated it. You can view and download results in the Web UI or Thorctl any time after a tool runs.

| Field | Description | Default |

|---|---|---|

| Location | Directory path to download input results into. | /tmp/thorium/prior-results |

| Kwarg | Flag used to pass in name or path of each input result file. | None |

| Strategy | Whether to pass in path or name to the kwarg. Options are Path, Names or Disabled when no argument should be passed. | Path |

| File Names | Names of result files to download from the dependent tool results. | Empty |

| Images | Names of other tools that this image need results from to run. | Empty |

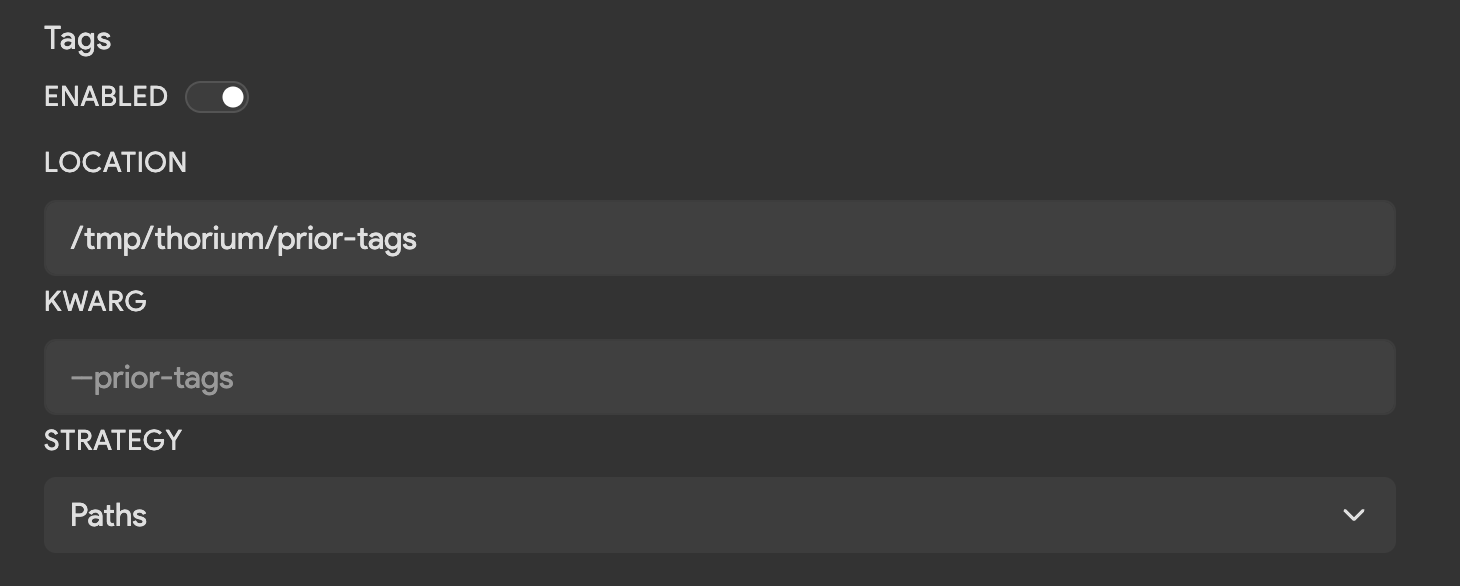

What are tag dependencies?

“Tags” in the context of dependencies refer to the Thorium tags of the sample or repo dependencies for a given reaction

(described above in Samples and Repos). This is useful when you have an image that can make decisions

based on tags set by other images in Thorium (or even by previous runs of the same image). For example, if you have an image

that is more effective at analyzing a sample if it knows the original language it was compiled from, you could add another image

earlier in the pipeline that attempts to tag the image with its language of origin (e.g. Language=Rust). Then, after enabling

tag dependencies for the analysis image, you can refer to the sample’s tags in your image to see which language was inferred

and analyze accordingly.

| Field | Description | Default |

|---|---|---|

| Location | Directory path to download sample/repo tags into. | /tmp/thorium/prior-tags |

| Kwarg | Flag used to pass in the name or path of each tags file. | None |

| Strategy | Whether to pass in the path or name to the kwarg. Options are Path, Names, Directory, or Disabled when no argument should be passed. | Path |

Tag files are by default saved to the directory /tmp/thorium/prior-tags and saved in the JSON format named as <SHA256>.json

for samples or organized into subdirectories by URL for repos (e.g. /github.com/project/repo.json). The following is an example of a

tag file:

{

"Language": [

"Rust",

],

"Strings": [

"Hello, world!",

"Goodbye",

"I'm a string 😎"

]

}

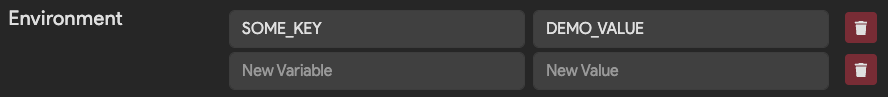

Environment Variables

(Optional)

Environment variables are dynamically set values that can affect the execution of a tool. Both Linux and Windows environments can have environment variables. Thorium will set any configured key/value environment variable pairs in the correct execution environment before an image runs. If your tool reads variables from the environment, it will be able to grab the key/value pair. A unique key is required when adding an environment variable. However, the value of that key can be empty/blank.

You can view the value of an environment variable on a Linux system using the following command:

echo $VARIABLE_NAME

VARIABLE_VALUE

This command may be useful when troubleshooting to confirm the environment variable is being set to the correct value when your tool runs.

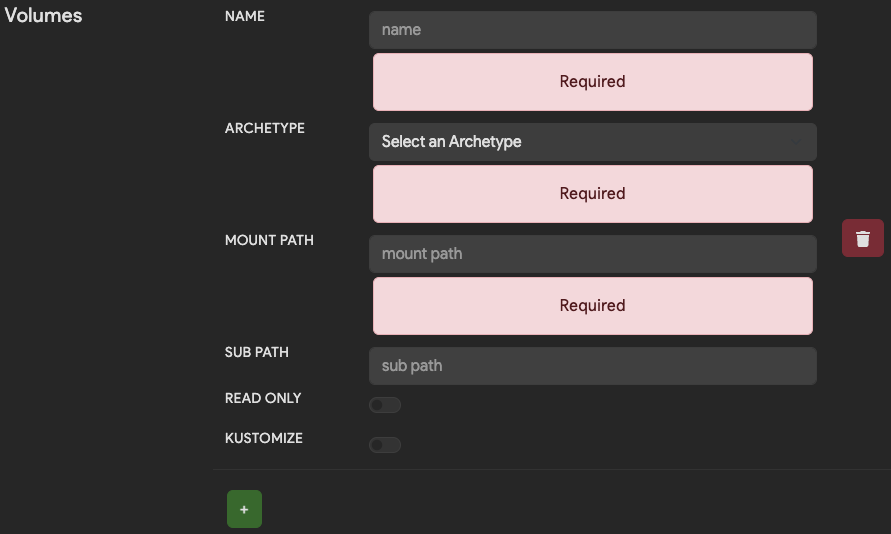

Volumes

(Optional, K8s images only)

Volumes are an advanced feature and are only relevant for tools that run in Kubernetes. Volumes in K8s can take the form of configuration files, secret files, host paths, or NFS shares. You can read more about K8s volumes in the K8s docs. If you think you need to map a volume into your K8s image you will need to reach out to an admin to have that volume setup within Thorium’s K8s instance.

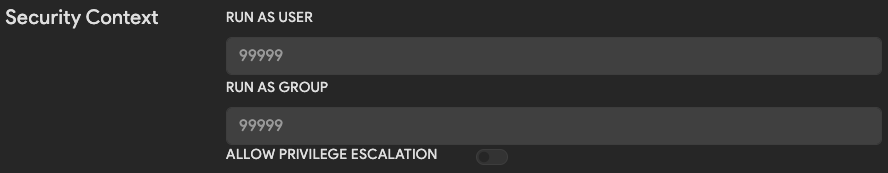

Security Context

(Optional, only admins can set these values)

By default Thorium runs all images as the user that requested them to run. This means that running tools have the effective user and group ID of a user rather than the developer that created the tool or some other privileged account. This helps to bound the abilities of tools that run in Thorium’s different execution environments. The security context values can be changed by admins to hard coded values that differ from these defaults. Note that the privilege escalation boolean setting is only relevant for images that run in K8s.

More on Children (Samples)

A sample submitted to Thorium as a result of running a Thorium reaction on another sample (the so-called “parent”) is called a “child.”

Origin Metadata for Children

Like any sample, children can have Origins to help identify where it came from later. Because children are submitted by the Thorium Agent automatically once a tool completes, it’s the tool’s responsibility to encode origin information by placing children in the origins’ respective directories (see the table in Output Collection) for the Agent to collect from.

In most cases, the Agent can infer origin metadata just from the placement of children by origin directory as well as from context

on how the Agent was run (e.g. which tool is running on which sample/repo). For example, the Agent can submit children with the Source

origin by collecting them from the source children directory (/tmp/thorium/children/source/ by default) and can infer metadata for

the Source origin – namely parent repo, commitish, flags, build system, etc. – just from the context of how the tool was run.

There are some cases, however, where the Agent cannot infer origin metadata beyond the origin type. These cases are detailed below.

Carved from PCAP

Thorium can save a lot of useful metadata about files carved from a PCAP (packet capture) sample beyond custom tags (see PCAP Origin for what kind of metadata can be saved). When manually uploading samples, it’s easy to add this information in the Web UI or Thorctl. When the Thorium Agent uploads children files, though, it needs a place to look to grab this information your tool may have extracted.

The special place the Thorium Agent looks is in the thorium_pcap_metadata.json file in the CarvedPCAP origin sub-directory

(/tmp/thorium/children/carved/pcap/ by default). This file should be a JSON map where the keys are children filenames (not absolute

paths) and the values are the metadata to encode. An example thorium_pcap_metadata.json file could look like:

{

"carved_from_pcap1.hmtl": {

"src": "1.1.1.1",

"dest": "2.2.2.2",

"src_port": 80,

"dest_port": 34250,

"proto": "TCP",

"url": "example.com"

},

"carved_from_pcap2.txt": {

"src": "3.3.3.3",

"dest": "4.4.4.4"

}

}

The table in PCAP Origin lists the fields each child file may have. The thorium_pcap_metadata.json file

is completely optional. If no metadata file is provided, all PCAP-carved children will still have the CarvedPCAP origin, just with no

metadata beyond the parent SHA256, the tool that carved out the file, as well as any custom tags your tool sets.

Building Pipelines

What are pipelines?